Building Scoundrel with ~Vibes~

This is a story about vibes. A story about the world we're in, where "anyone can be a developer." A story about the magic of vibe coding.

If you're not familiar with the concept of vibe coding it is, at its core, a fancy wording for "Development via AI prompt engineering" and it is everything you expect it to be. Now, "everything you expect it to be" is going to vary a lot by person. Maybe to you it means "describe a program and the AI will magically create it." Maybe it means "fight with an AI for hours to get basic functionality written terribly." Maybe it even means "The AI is going to steal code from repositories and create something with it that won't do what I want it to do." Well, here's the good news: it is all of these things! Or at least it was in my experience. Now that we have a baseline understanding of vibe coding, let's set the stage for our story.

Or, if a story is boring to you and you just want the summary, skip down to my final thoughts!

It's a Wednesday night. I've been unemployed for about 3 months and the insanity has begun to set in. Recently I learned the game Scoundrel, a clever little basic "dungeon crawler" Solitaire game. The logic of the game isn't too complicated:

1. Remove all hearts/diamonds with a value of Jack or higher (including Aces).

2. Shuffle the deck and deal out 4 cards to the tableau; this is the first "room" of the dungeon.

3. Clubs and Spades are monsters, and deal damage equal to their value (Jack -> Ace = 11 -> 14).

4. Hearts can be used to restore HP, but can't be used twice in a row even if you move rooms.

5. Diamonds are Weapons to equip and can be used to fight monsters. The value of the monster is reduced by the value of the weapon, to a minimum of zero.

- Once a monster is defeated with a weapon, the monster is placed on the weapon, and that weapon can only be used against monsters with a value less than the last defeated monster

- You can only have one weapon equipped at a time, and equipping a new weapon resets the stack of monsters defeated

- You can also opt not to use an equipped weapon to fight a monster

6. You have 20 health maximum, and cannot go above that for any reason.

7. After you use 3 cards in a room, deal out 3 more to the tableau; this is the next room.

8. You can skip a room if you haven't played any cards from the room yet, but you can never skip 2 rooms in a row. Skipped room cards go to the bottom of the deck.

9. Used and defeated cards are discarded.

10. You win when you get through the whole deck, and lose if your health hits or drops below 0.

The rules for Scoundrel

See? Easy set of rules. This isn't a complicated game. Great, moving on. So I recently learned the rules to play this, and my first thought is "I've wanted to learn Unity, this seems like a great project to do that!"

But this isn't the story of "Devin learns Unity," this is the story of "Devin uses an AI to build Scoundrel." So we jump forward a couple weeks, learning Unity has been going well, but most of what I'm learning there is more of "How do I connect game objects to elements in a scene?" and "Why doesn't Unity have good hot reload of classes?" Needless to say, it was going great. It was about this time that I started hearing the term "vibe coding" floating around. People using AI tools to build entire applications, CEOs starting companies without any trained devs, talking to LLMs to generate whole products, it all sounded so. . . so. . . so very, very stupid.

I said to myself "No way this is a real thing, I use LLMs for auto-complete and occasionally it does a decent job of generating documentation, but an entire bespoke program? No chance in hell. A neat parlor trick at best!" After which I wrote it off. Then I realized I had the free tier of GitHub Copilot. "Oh cool, I guess I can use this to try out how vibe coding works and see if it's worth the hype."

But I needed a project. Something I had a firm grasp of but wasn't so large that I would spend more than 30 minutes on it. Something with a set of clear logical rules and requirements. Something I could easily tes- ok, you get the point. I decided to use it to build a version of Scoundrel. But I decided to stick to a language set I knew well and went with HTML + JS. Not React, not Vue, just straight HTML + JS. This would let me quickly look through the code to judge its quality, identify bugs, and run the whole thing with a simple page refresh. A perfect little project. Furthermore, I wanted to set two clear rules for this:

- I am allowed to examine the code and make suggestions based on any bugs/issues I identify, but I am not allowed to actually write any code, all of the code needs to be generated by the LLM.

- I'm not allowed to examine the code until I have specifically requested a fix for a problem and the LLM has failed to deliver it.

I know I went over what vibe coding is at the start of this, but let's actually give an example of how it works. As with any LLM, everything happens in "turns." I provide a prompt, and I get a response back; this full exchange is called a "turn." Single-turn prompts are the most common use case for LLMs: you ask a question ("What is the capital of the Philippines?") and the LLM responds with an answer ("The capital of the Philippines is Manila"). A multi-turn prompt would be if I continued the conversation, building on the previous prompt ("And what are some things to do there in March?"), letting the LLM use the built-up context to answer that question and any others I might ask as the conversation continues. Using this built-up context, the LLM can build on its previous responses and edit the code using new and old information. With GitHub Copilot specifically, I asked it to generate a Hello World program in Kotlin, and it created a file containing the following code:

    fun main() {
        println("Hello, World!")
    }

Not even the simplest Kotlin "Hello World" code

And then I instructed it to simplify that to use the equals operator for functions. It adjusted the file to be:

    fun main() = println("Hello, World!")

Simplicity!

And there we go, vibe coding. But, of course, true vibe coding wouldn't have asked it to change the function to make it simpler. I'd have left it, because it's not my job to write the code. My job is to tell it what code I want and when something is wrong! Couldn't be easier, right? Let's see how it actually went. (Oh, and also, the above is from my actual experience, not a drummed-up example)

Scoundrel, the Vibening

I decided to start off simple enough, greeting Copilot, explaining what we were doing, providing the rule set as written on BoardGameGeek, and giving it a language constraint (HTML Canvas + JS). Things, to my surprise, started off pretty well, with the LLM generating a valid index.html file and the start of our game.js file [1]. On a first pass over the code, the HTML is fine, and the JS is functional. There are a number of odd choices that the LLM makes, for example:

    let playerHealth = 20;

    // Initialize the game
    function initGame() {
        deck = createDeck();
        shuffleDeck(deck);
        playerHealth = 20;
        // Additional initialization code will go here
    }

Can't see this being a problem in the future!

For whatever reason, the LLM decided to set playerHealth = 20 twice. The initGame function is called before the game actually starts running, so we didn't need to set it prior to that. But it's fine, we're vibing! To be fair to the LLM though, it also made a number of very good decisions. For example, the createDeck and shuffleDeck functions were both built with the logic of the game in mind:

    // Create and return a shuffled deck of cards
    function createDeck() {
        const suits = ['spades', 'clubs', 'diamonds', 'hearts'];
        const values = [2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14]; // Face cards are valued 11-14, Ace is 14
        let deck = [];
        for (let suit of suits) {
            for (let value of values) {
                deck.push({ suit, value });
            }
        }

        // Remove Jokers, red face cards, and red aces
        deck = deck.filter(card => {
            if (card.suit === 'hearts' || card.suit === 'diamonds') {
                return card.value < 11; // Remove red face cards and red aces
            }
            return true;
        });

        return deck;
    }

    // Shuffle the deck using Fisher-Yates shuffle algorithm
    function shuffleDeck(deck) {
        for (let i = deck.length - 1; i > 0; i--) {
            const j = Math.floor(Math.random() * (i + 1));
            [deck[i], deck[j]] = [deck[j], deck[i]];
        }
    }

Look at that, needed code!

It correctly sets up the suits and creates a deck of "card" objects, avoiding the red face cards and aces. Very nice! For the shuffling it opted to go with the Fisher-Yates algorithm since JS doesn't have a built-in shuffle function. Sure, we're not doing a cryptographically secure random number, but we're also not writing for a casino. The end result of all of the HTML and JS generated in this first pass is a canvas that displays the player's health and a shuffled deck of cards behind the scenes. Not a bad start!

Over the next couple of turns I asked it to add a number of things.

- Dealing out the cards

- Changing the cards to show an ASCII suit icon rather than the actual name

- Adding a handler for hearts (the easiest card type to implement)

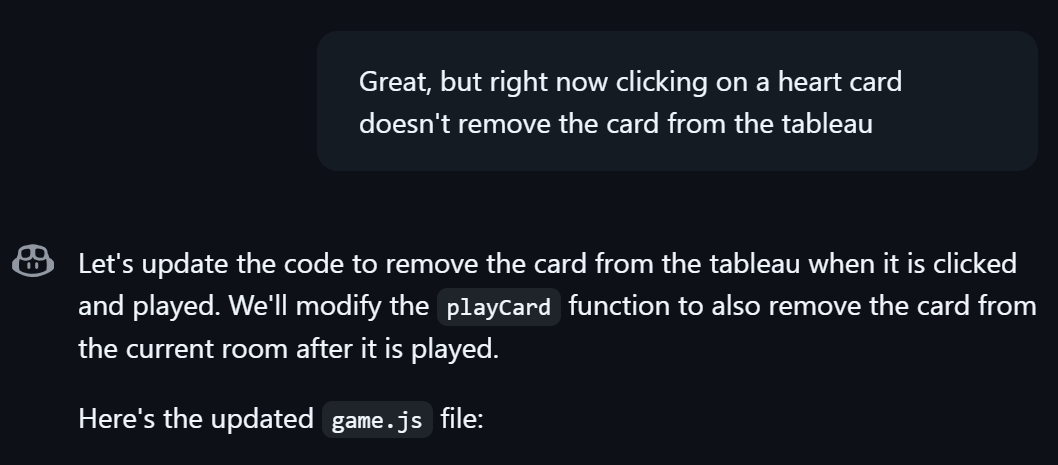

Here we ran into our first problem. While the code is dealing out the cards correctly and showing the right suit, clicking on a heart card isn't removing it from the tableau. "Finally," I thought to myself, "A real test for the LLM, having to fix a bug!" And so, I asked it to address the specific issue:

And there we go, problem fixed! Or was it? Surprisingly, yes! After just one turn the bug was successfully squashed. Surely this will be a consistent and reliable trend! From here I moved on to the next card type I wanted to address, the spades and clubs. Immediately, we ran into another issue: while clicking on a spade/club does reduce my health correctly, the clicking is unreliable. I spent a little bit of time trying to figure out what the issue was and fed the LLM my best guess. To Copilot's credit, it did what any good developer would do: it threw in debug statements and asked me for more info. It also decided to randomly add logic for moving to the next room. This was my first experience with what I have dubbed "The Rush Job Protocol," where the LLM decides that it wants to move on to something you haven't yet asked for because it knows it's coming, despite there being existing errors, much in the same way an engineer might move on without fixing their existing issues.

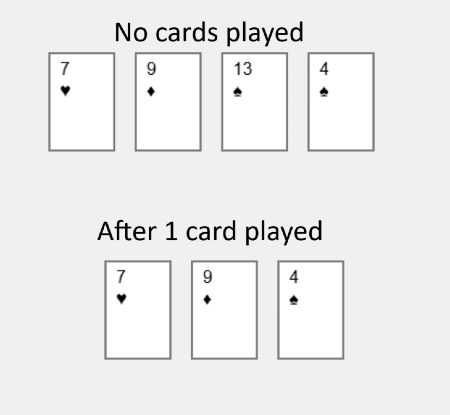

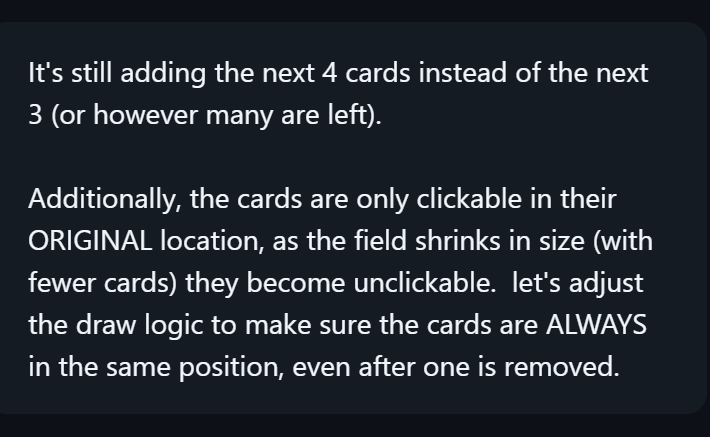

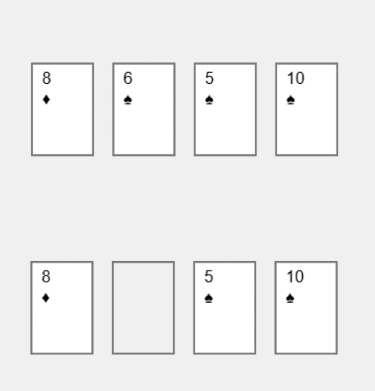

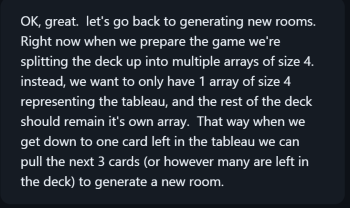

Here, I made a mistake. Rather than focus on figuring out the unresponsive card bug, I swapped to a new bug the LLM introduced when adding the "new room" logic. The logic implemented dealt out 4 new cards to the tableau instead of the expected 3 (remember, the last card in a room is used as the basis for the next room, so you only deal out 3 extra cards!). After another round of invalid fixes for the new room issue, I did finally figure out why the card clicking was inconsistent. The "clickable area" on the canvas to trigger a card to play was tied to where cards were originally, but the current implementation of the tableau had the cards shrinking inward with every card played:

Due to this, the clickable area no longer matched up with a card's location! For this one I wanted to test giving the LLM a very direct description of the change I wanted made, as if I were talking to a junior engineer.

This direction was enough for the LLM to mostly solve the problem. The tableau no longer shrank; instead, the cards themselves shifted left as space opened up. While this does work, it's not exactly what I wanted from a UX perspective: I wanted the cards to retain their original positions at all times so there wouldn't be as much odd movement on screen. This took two rounds to resolve. In the first round I simply explained what I wanted, but it took a second round, in which I suggested using a null placeholder in the array, to get the desired result. The LLM also managed to delete the Health tracker on the canvas along the way, which it fixed after I pointed it out.
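To make the null-placeholder idea concrete, here is a minimal sketch of how fixed slots keep the drawn position and the clickable area in sync. This is not the code Copilot produced: the layout constants and the slotX, playCard, and slotAt names are all made up for illustration.

```javascript
// Hypothetical layout values; the real game's numbers are not shown in this post.
const CARD_WIDTH = 100;
const CARD_HEIGHT = 150;
const CARD_GAP = 20;
const TABLEAU_X = 40;
const TABLEAU_Y = 60;

// A slot's left edge depends only on its index, never on how many cards remain,
// so drawing and hit-testing can share this one function.
function slotX(index) {
  return TABLEAU_X + index * (CARD_WIDTH + CARD_GAP);
}

// Playing a card leaves a null "hole" instead of removing the array element,
// which is what keeps the remaining cards from shifting.
function playCard(tableau, index) {
  const card = tableau[index];
  tableau[index] = null;
  return card;
}

// Map a canvas click to a slot index, or -1 if the click missed every card
// (outside the row, in a gap between cards, or on an empty slot).
function slotAt(tableau, x, y) {
  if (y < TABLEAU_Y || y > TABLEAU_Y + CARD_HEIGHT) return -1;
  for (let i = 0; i < tableau.length; i++) {
    const left = slotX(i);
    if (x >= left && x <= left + CARD_WIDTH) {
      return tableau[i] === null ? -1 : i;
    }
  }
  return -1;
}
```

Because the array never changes length, a click that lands on the third card's rectangle always resolves to index 2, no matter how many cards have already been played.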

This was the first full set of fixes where I really started to feel the limitations of the LLM. I described it to a friend as "feeling like I'm talking to a lazy developer." To put it more simply, the LLM creates code, "assumes" it works, and passes it off as if it does, much like a lazy developer. It was also the first time I felt like my experience as an engineer and my understanding of how the code worked made a difference in how long it took to correct the issue. I have a sneaking suspicion that if someone without technical knowledge tried this, they would have spent quite a while trying to get the LLM to understand what needed to change.

Next on the list was implementing the functionality of diamonds. This was easily the most painful bit of logic to implement. On the surface it should be easy:

5. Diamonds are Weapons to equip and can be used to fight monsters. The value of the monster is reduced by the value of the weapon, to a minimum of zero.

- Once a monster is defeated with a weapon, the monster is placed on the weapon, and that weapon can only be used against monsters with a value less than the last defeated monster

- You can only have one weapon equipped at a time, and equipping a new weapon resets the stack of monsters defeated

- You can also opt not to use an equipped weapon to fight a monster

As a reminder, the rules were like 2200 words ago after all!

But I was, at this point, still relying on the definition of the rules laid out by BoardGameGeek.

Weapons may be equipped to attack multiple monsters, as long as each defeated monster is of lower value than the last monster defeated with that same weapon. To attack a monster of higher value, a new weapon must be equipped. Monsters can also always be attacked barehanded, but this depletes the player's health for the full value of the monster.

Copyright here goes to BoardGameGeek!

While this is absolutely human readable, the LLM struggled with it quite a bit. The logic either didn't work, or worked but missed portions, or worked but missed key edge cases, or worked but deleted seemingly unrelated logic, etc. It took 9 turns, a bit of code sleuthing, and two attempts to explain the rules in a more parseable way for the LLM before it finally got it right. Again: Lazy Developer.
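For reference, the weapon rules boil down to very little code once they are stated precisely. The following is only a sketch of the rules as I listed them at the top of this post, not what Copilot ended up generating. The state field names match the ones in game.js, but canUseWeapon, fightMonster, and equipWeapon are hypothetical, and falling back to a barehanded fight when the weapon can't be used is my own assumption.

```javascript
// A weapon is usable if one is equipped and either it has not defeated anything
// yet or this monster is weaker than the last monster it defeated.
function canUseWeapon(weapon, lastMonsterDefeated, monster) {
  if (weapon === null) return false;
  if (lastMonsterDefeated === null) return true;
  return monster.value < lastMonsterDefeated.value;
}

// Resolve one fight and return the updated state without mutating the input.
// Assumption: if the weapon cannot be used, the fight is treated as barehanded.
function fightMonster(state, monster, fightBarehanded) {
  const useWeapon =
    !fightBarehanded &&
    canUseWeapon(state.equippedWeapon, state.lastMonsterDefeated, monster);
  const damage = useWeapon
    ? Math.max(0, monster.value - state.equippedWeapon.value) // never below zero
    : monster.value; // barehanded: take the monster's full value
  return {
    ...state,
    playerHealth: state.playerHealth - damage,
    // Only weapon kills go onto the weapon's stack.
    lastMonsterDefeated: useWeapon ? monster : state.lastMonsterDefeated,
  };
}

// Equipping a new weapon resets the stack of defeated monsters.
function equipWeapon(state, weapon) {
  return { ...state, equippedWeapon: weapon, lastMonsterDefeated: null };
}
```

Writing the rule as three small functions also makes the edge cases the LLM kept missing easy to check one at a time.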

Finally, with diamonds, hearts, clubs, and spades all working correctly, it was time to get back to the "room not repopulating correctly" bug. As a reminder, this manifested in the tableau ending up with 5 cards in it rather than 4. At this point in the night, I was sick of fighting with the LLM and resorted to early code sleuthing. In my defense, I had attempted to fix this issue during an earlier turn, so I think I'm justified here. The issue was immediately obvious to me. Can you spot it?

    function initGame() {
        deck = createDeck();
        shuffleDeck(deck);
        playerHealth = 20;
        rooms = createRooms(deck);
        currentRoomIndex = 0;
        lastCardPlayed = null;
        equippedWeapon = null;
        lastMonsterDefeated = null;
        drawGame();
    }

    // Create rooms from the deck
    function createRooms(deck) {
        let rooms = [];
        while (deck.length > 0) {
            const room = deck.splice(0, 4);
            rooms.push(room);
        }
        return rooms;
    }

Remember: a new room is created when there is 1 card left, to a maximum of 4 cards!

That's right, the createRooms function is pre-splitting the deck into rooms of size 4. While this is obviously incorrect to an engineer familiar with the rule set, this is the kind of thing that people without a technical background are unlikely to recognize. Once I had the problem space figured out it only took a single, very specifically phrased, prompt to rectify the problem.
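What the rules actually call for is topping the room back up from the deck while keeping the leftover card. Here is a rough sketch of that idea, assuming the null-placeholder tableau described earlier; ROOM_SIZE, cardsLeft, and refillRoom are names I made up for this example, and this is not the fix Copilot wrote.

```javascript
const ROOM_SIZE = 4;

// Number of cards still in the room (null marks a slot whose card was played).
function cardsLeft(tableau) {
  return tableau.filter(card => card != null).length;
}

// Fill every empty slot from the top of the deck. The leftover card stays in
// its own slot, so a room with one card left draws exactly three new cards.
function refillRoom(deck, tableau) {
  for (let i = 0; i < ROOM_SIZE; i++) {
    // "== null" matches both null (played card) and undefined (a fresh, empty tableau).
    if (tableau[i] == null) {
      tableau[i] = deck.length > 0 ? deck.shift() : null;
    }
  }
  return tableau;
}
```

The same function handles the very first deal (pass in an empty array) and the end of the deck, where some slots simply stay empty.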

At this point, the hardest part of the game logic was done. From here on out it was about adding interactivity outside of the canvas. We needed a "Skip Room" function, a "Fight Barehanded" function, an action log that shows what the player has been doing, Start Game, Game Over, and Victory screens, and a quality-of-life change of displaying red suits as red and black suits as black. None of this is particularly complex; most of it either barely modifies existing code (skip room, barehanded fighting) or is nearly entirely HTML manipulation. Should be quick, right?

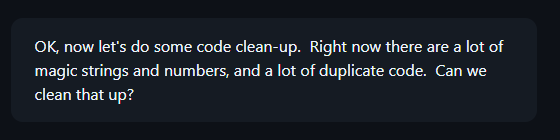

This took me EIGHTEEN turns. For 6 very minor features it took 18 turns. And worst of all? There was SO much duplicated code. The LLM very clearly does not believe in DRY. A great example of this is the functions for drawing the victory, game over, and start screens.

    // Draw the title screen
    function drawTitleScreen() {
        ctx.font = '60px FantasyFont, cursive';
        ctx.fillStyle = '#000';
        ctx.textAlign = 'center';
        ctx.fillText('Scoundrel', canvas.width / 2, canvas.height / 2);
    }

    // Draw the game over screen
    function drawGameOverScreen() {
        ctx.clearRect(0, 0, canvas.width, canvas.height);
        ctx.font = '60px FantasyFont, cursive';
        ctx.fillStyle = '#000';
        ctx.textAlign = 'center';
        ctx.fillText('Game Over', canvas.width / 2, canvas.height / 2);
        skipRoomButton.classList.add('hidden');
        fightBarehandedCheckbox.parentElement.classList.add('hidden');
        startGameButton.classList.remove('hidden');
    }

    // Draw the victory screen
    function drawVictoryScreen() {
        ctx.clearRect(0, 0, canvas.width, canvas.height);
        ctx.font = '60px FantasyFont, cursive';
        ctx.fillStyle = '#000';
        ctx.textAlign = 'center';
        ctx.fillText('Victory', canvas.width / 2, canvas.height / 2);
        skipRoomButton.classList.add('hidden');
        fightBarehandedCheckbox.parentElement.classList.add('hidden');
        startGameButton.classList.remove('hidden');
    }

DRY? More like DRY your eyes, we're duplicating shit over here!

These three functions could easily have been condensed. The code already uses a game state variable to know what to draw, and that could have been used to simplify all of this. Not to mention the magic strings. . .

But it doesn't end there. All over the place in the code for the whole game.js file there are terrible coding choices made. Magic numbers/strings all over the place, duplicated code, mixed domain responsibilities, lackluster design philosophies, it's a mess.

Here's an example of why easily changeable code is important: I wanted to test the victory condition, but the game is difficult. My solution? Adjust my max health to 500 and just click until I'm out of cards. Now, in a human-built solution there would be a single "Max Health" variable I could change and I'd be off to the races, but not so here! Remember that code block from much earlier? This one?

    let playerHealth = 20;

    // Initialize the game
    function initGame() {
        deck = createDeck();
        shuffleDeck(deck);
        playerHealth = 20;
        // Additional initialization code will go here
    }

Well, I didn't know about the change in initGame when I started my testing, so I just changed the first variable. Once I saw my health was still 20 I found this line and changed the value. Perfect! I'm good to go now, right? No! Of course not! Remember how in the rules we say that you have an absolute max HP of 20?

    // Handle health potion effect
    function handleHealthPotion(card) {
        if (lastCardPlayed && lastCardPlayed.suit === '♥') {
            // Nullify the effect of the second potion in succession
            console.log('Second potion in succession, effect nullified.');
            logAction('Second potion in succession, effect nullified.');
        } else {
            // Restore health points up to the maximum starting value
            playerHealth = Math.min(20, playerHealth + card.value);
            logAction(`Health restored by ${card.value} points`);
        }
    }

You sneaky SOB

See the issue? It's right there, where playerHealth is set to Math.min(20, playerHealth + card.value);. Because the LLM is using magic numbers, if I used a potion after setting my health to 500 it would bring it back to 20! If there was a single "Max Health" variable ALL of this would have been avoided. It's an absolute mess.
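For contrast, this is roughly what the single-constant version could look like. It's a minimal sketch rather than the code from game.js: MAX_HEALTH, startingHealth, and healedHealth are names I'm inventing here, and the "no two potions in a row" check is passed in as a plain boolean to keep the example self-contained.

```javascript
// The one place to change when testing, e.g. bump this to 500.
const MAX_HEALTH = 20;

// Health at the start of a game comes from the same constant as the cap.
function startingHealth() {
  return MAX_HEALTH;
}

// Return the player's health after drinking a potion.
// A second potion in a row has no effect; otherwise heal up to the cap.
function healedHealth(currentHealth, potionValue, lastCardWasPotion) {
  if (lastCardWasPotion) return currentHealth;
  return Math.min(MAX_HEALTH, currentHealth + potionValue);
}
```

With this shape, changing one number changes both the starting health and the healing cap, so the 500 HP test run would have worked on the first try.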

And then there's the generation time! For a 419-line JS file it would take over a minute to generate. So those 18 turns took well over half an hour of generate, debug, prompt-engineer, repeat. And that was for the EASY part! The whole thing took nearly 3 hours to get through. In 3 hours I could have written the whole game myself, with better coding practices, and I am terrible at using canvas.

After talking with a friend, I was inspired to see how much I could get the LLM to clean up the code to make it more human-maintainable in the long run.

This had. . . mixed results. In its first pass, the LLM was successful in extracting many of the magic strings/numbers out into constants, and did a good job at condensing the duplicate functions into a set of reusable component functions:

    function drawCenteredText(text, fontSize) {
        ctx.font = `${fontSize} ${FONT_FAMILY}`;
        ctx.fillStyle = '#000';
        ctx.textAlign = 'center';
        ctx.fillText(text, canvas.width / 2, canvas.height / 2);
    }

    function toggleUIElements(isRunning) {
        logContainer.classList.toggle('hidden', !isRunning);
        skipRoomButton.classList.toggle('hidden', !isRunning);
        fightBarehandedCheckbox.parentElement.classList.toggle('hidden', !isRunning);
        startGameButton.classList.toggle('hidden', isRunning);
    }

Good job, Copilot!

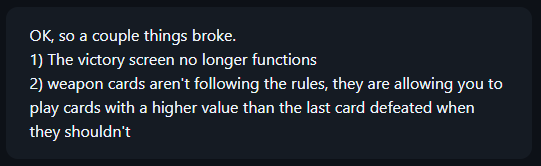

Unfortunately, in doing its clean-up, it also managed to produce code that wouldn't run. After removing the drawTitleScreen function, it still attempted to call it at the very end of the file. With a quick prompt that too was fixed, only for me to find that it had also broken the toggling of the non-canvas UI elements. Another prompt later and I got to find out that the LLM had broken the victory screen condition and the weapon logic as well.

The last thing that I needed to have it clean up was the double declaration of playerHealth and extracting a few more magic strings from the canvas draw methods. In total, it took 5 more turns to fix the code, which took about 30 minutes between waiting for the code to generate, QAing the result, and then formulating the next prompt. And that sounds like I'm upset, but honestly the work it did extracting the magic strings/numbers out and condensing methods was exceptionally fast. This was, in my experience, the best part of this tool. But then again, that isn't too surprising. I already use LLMs for this kind of thing in my normal code flow but having an example I could show here is absolutely worth the effort.

So, after a total of 44 turns and ~4 hours of work, the game works and the code is fairly good, but the effort required was probably more than it was worth.

Final thoughts

But as much as I wish I could say that this whole project was a failure, it frustratingly wasn't. While I happen to be someone who understands JavaScript and HTML, those skills helped me less than simply understanding basic programming philosophies. I could likely recreate this project in a language I don't know in roughly the same time. Sure, the code would be a crime. And yes, someone who knows the language would get it done in half the time manually. But if I could create something even usable with this amount of logic and user interaction in a totally unknown-to-me language in that short a time, that is absolutely a huge force multiplier. I have no doubt that if I actually took the time to instruct the LLM on how I wanted the code to be improved, it would be able to do it, but knowing how it should be improved is a function of experience. If I had allowed myself to modify the code rather than forcing myself to have the LLM do it, I would have finished this all much faster; you can see how just knowing something as simple as how arrays work saved me multiple turns when debugging. Finally, if you're going to do this yourself, do yourself a favor and write a very clear list of rules for the game, like I did for you at the start of this article, rather than something "human readable"; you'll probably have an easier time.

If you read this far, or if you just skipped down to the "Final Thoughts" section, here's the deal: As an engineer with 15 years of development experience across multiple languages and stacks, I don't think that LLMs are coming to replace good engineers anytime soon. But I also think that if you're foolish enough to write off LLMs as a tool to improve your own development velocity and learning speed, you are shooting yourself in the foot. People who understand both software engineering and LLM prompt engineering are likely to be the most desirable engineers in the future because they don't limit themselves.

And to all the CEOs and CTOs who think they can replace their staff with LLMs, heed my warning: The minute something goes wrong you are going to pay 10x whatever you saved by firing the staff who know what the hell your code does, and can make changes without begging the LLM to fix it. Get your engineers an LLM, maybe even make them do workshops on how to use it properly; if you want return on investment this is the path.

Thanks everyone for reading this post! It was an absolutely eye-opening experience using GitHub Copilot like this, and I'm definitely going to modify my own workflow to make better use of LLMs in the future. I post new blog posts when I find something interesting to talk about, so sign up for updates if that's something you might be interested in!

Cheers!

[1] Due to the size of these files, I won't be posting the whole contents here, but you can follow along with my conversation here.