Recursion

Recently I have been getting a lot of questions about recursion, ranging from what it is to when to use it, so let's talk about it!

I hang out in a number of Slack channels. Many of them tend towards the more junior side so I frequently find myself helping newer developers learn (Something I am quite attached to). Recently I have been getting a lot of questions about recursion, ranging from what it is to when to use it, which seemed like an excellent topic for an article.

Recursion: Problem solving without iteration

Recursion is defined as a method of solving problems where the "solution depends on solutions to smaller instances of the same problem." Super clear, right? We can just move on now, yeah?

As with all things Computer Science often what's better than a definition is an example. Something to drive home the concept. For that, let's turn to our trusty old friend: Math. If you've never heard of the Fibonacci Series the idea is that it's a series of numbers composed of the previous two numbers in the series (1, 1, 2, 3, 5, 8, 13...). The traditional function representation of this would be

f(n) = f(n-1) + f(n-2), f(0) = 0 f(1) = 1

Effectively, this is saying "Given a number, we can calculate the function's result by solving for the same problem in its component cases." For example, if we ask for f(4) we can find the solution by calculating f(3) + f(2), in which both functions can be solved in a similar fashion ((f(2) + f(1)) + (f(1) + f(0))). By identifying problems that can be solved this way we allow ourselves to write easy to read solutions that can be represented by pure functions.

But how do I. . . recursion?

Now that we understand WHAT recursion is let's move into the interesting bits, HOW to do recursion. We'll start off slow to get our feet wet, then kick it into a higher gear.

Consider the following problem:

const arr = [1,2,3,4,5,6,7,8,9,10]

//Sum the array

This problem is simple enough for most. The easiest JavaScript is to simply use reduce.

arr.reduce((acc, x) => acc + x)

Nice and simple. For each element in the array we add it to the next element until we run out of elements to add! Super simple, no reason to do it any other way. Unless, of course, we want to be recursive.

Let's think about what we just said, "For each element, we add it to the next element until we run out of elements to add." Given that, then it's sensible to say that to get the sum of every number BUT the first number, we do the same action. But if that's true then it also stands to reason that it's true for the next number as well, and so on and so forth until we're out of numbers. And just like that we've identified a cursive patterns!

But what does that look like when we actually code it?

const sum = (array) => {

if (array.length === 0) {

return 0

} else {

return array[0] + sum(array.slice(1))

}

}

Now, I hear you saying "Oh wow, hey, that's like. . . a lot of code. What is even going on?" so let's just demystify this right up front.

Remember how we defined the recursive sum function? That the sum of the list is the sum of the first item in the list plus the sum of the remainder of the list? That's this: return array[0] + sum(array.slice(1)). The code here represents exactly what we describe. We take the first element of the array and add it to the sum of the remainder of the array, as calculated by our sum function!

You'll notice though that we still have an additional cases to cover though. If we don't stop ourselves we'll eventually hit a situation where array[0] throws an exception because the length of the array is 0. This leads us to a very important part of recursion called a "base case."

Throwing back for a minute to our Fibonacci example, you'll remember that we specifically called out two cases: f(0) = 0 and f(1) = 1. These are important base cases because they prevent the algorithm from running infinitely. When f(0) is called it's not calculated by f(-1) + f(-2), it JUST returns 0. This is the same as what we have to do with our sum function. If array.length === 0 then we can just return 0. This stops the recursion and begins resolving the functions up the stack.

How does recursion. . . recursion?

So now we know what a recursive function looks like, and we know what it is, but what does it actually doing? I mentioned the stack in the last section, and resolving up it, but what does that even mean? Let's look at our sum example and go step by step for a smaller scale array

const arr = [1,2,3]

const sum = (array) => {...}

//First call => 1 + sum([2,3])

//Second call => 2 + sum([3])

//Third call => 3 + sum([])

//Forth call => 0

//Now because the first 3 calls can't resolve without the result of the forth call they are left waiting. Once the forth call happens it reverses up the stack

//Third call => 3 + 0 = 3

//Second call => 2 + 3 = 5

//First call => 1 + 5 = 6

So each time we perform the sum function we need to wait for the result of the next call before we can finish our call. Once the base case is met we can begin moving up the call stack adding the results until we finish our call.

Are there more than one kind of recursion?

This is a common question, stemming from the fact that in programming there is often multiple ways to perform any given action. And just like everything else in programming this is true for recursion as well. Recursion comes in two flavors, "head" and "tail" recursion. Head recursion requires us to finish a calculation of the base case before we can solve the previous step (as in our current sum method). In contrast, tail recursion resolves everything before moving to the next step. Let's see this in action

const sum = (arr, accumulator = 0) =>

arr.length == 0

? accumulator

: sum(arr.slice(1), accumulator + arr[0]);

sum([1,2,3])

//First call: sum([2,3], 0 + 1 = 1)

//Second call: sum([3], 1 + 2 = 3)

//Third call: sum([], 3 + 3 = 6)

//Forth call: 6

As you can see, in this example we're figuring out all the data mutations before moving to the next step. This allows some compilers and run-times to make use a feature called "tail optimization" to prevent a stack overflow, but we'll talk about that later.

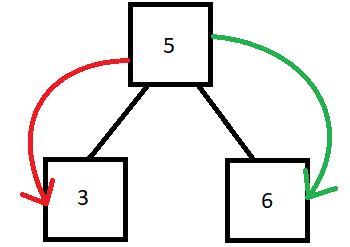

While it certainly comes off as tail recursion being strictly better than head recursion, they are in actuality used for different cases. While tail calls can be optimized, head calls allow for branching paths.

Branching paths, code complexity, and complex problems

So far all of the recursive code we've seen is strictly longer and more complex than it's iterative brother, so what's the point? To answer that, let's look at a bit of a more complex problem: Summing up values in a Binary Tree.

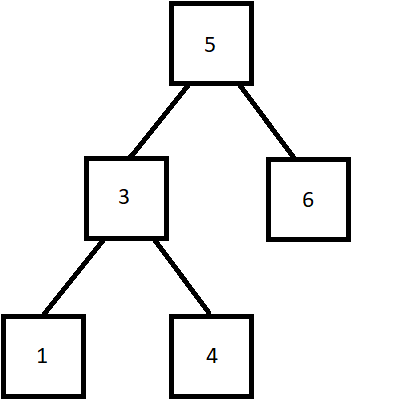

Binary Trees can be represented as a set of interconnected nodes. A node contains three things: A value, a left node, and a right node.

Here is a very basic implementation of a Binary Tree that we can use as our base:

class BinaryTree {

constructor(value) {

this.parentNode = new Node(value)

const addFunc = (value, parentNode) => {

if (value < parentNode.getValue()) {

if (!parentNode.getLeft()) {

parentNode.setLeft(new Node(value))

} else {

addFunc(value, parentNode.getLeft())

}

} else {

if (!parentNode.getRight()) {

parentNode.setRight(new Node(value))

} else {

addFunc(value, parentNode.getRight())

}

}

}

this.add = (value) => addFunc(value, this.parentNode)

}

}

class Node {

constructor(value) {

let left = undefined

let right = undefined

this.getValue = () => value

this.getLeft = () => left

this.getRight = () => right

this.setLeft = (node) => left = node

this.setRight = (node) => right = node

}

}

const tree = new BinaryTree(5) //Because the tree isn't self-balancing, this will keep it from being TOO lopsided.

tree.add(1)

tree.add(2)

tree.add(3)

tree.add(4)

tree.add(6)

tree.add(7)

tree.add(8)

tree.add(9)

tree.add(10)

Given our problem, let's quickly look at a solution algorithm. Given any given parent node, the sum is the value of the sum of it's left child, itself, and the sum of it's right child. When no children are present the assumed sum for that child is 0.

Assuming the given node is the parent of a tree, the logic tells us that the sum of that node is the sum of the entire tree where it's the parent. This is true for EVERY child node; or more plainly since every child node is the parent of it's own binary tree, the algorithm can be used recursively to identify the total sum of a binary tree.

"WOW, ok, so because every node is in itself a parent, every node can find the sum in the same way?" Yep! Exactly, well summed up! Now the we understand that, let's move on to the actual code. We'll start with our iterative solution:

const sumIterative = (node) => {

let currentNode = node;

const stack = []

let sum = 0

while(stack.length > 0 || currentNode) {

if (currentNode) {

stack.push(currentNode)

currentNode = currentNode.getLeft()

} else {

currentNode = stack.pop()

sum += currentNode.getValue()

currentNode = currentNode.getRight()

}

}

return sum

}

"WOAH! That's a ton of code, and it's so confusing! If the code for the imperative solution is this long then the recursive one must be MASSIVE!"

Given our past examples, you would think that! Let's see:

const sumRecursive = (node) => {

return !node

? 0

: sumRecursive(node.getLeft()) + node.getValue() + sumRecursive(node.getRight())

}

Huh. Not what you were expecting, right? We've gone from 16 lines down to 5, and really 3 if you one-line the ternary! What's more, the function is no longer a mess of confusing code and it now tells us exactly what it's doing: If a node exists return the sum of the left plus the value of the node plus the sum on the right. If it doesn't exist then return zero. That's exactly what we outlined in our planning, which is perfect. So we were able to describe a function, create the function, and execute it without any hassle. In situations like this the power of recursion becomes obvious.

So what's the catch?

And so it's come to this. We've spent all this time exploring the power of recursion so it's time to ask the age old question "So what's the catch?" And unfortunately it's a bit of a doozy.

Remember how I talked about call stacks and head/tail recursion? Let's revisit that. As you may know, the call stack is a runtime way of tracking all the function calls a program is making. As calls complete, they pop off the stack, just like you'd expect. Unfortunately, as I mentioned before, head recursion requires calls to stay on the stack until the base case resolves. But what if the base case doesn't resolve quickly? What if, for example, the Binary Tree is several thousand nodes large? 15000 nodes even? Unfortunately the call stack is not an infinite resource, and while he limit might very from language to language there is ALWAYS a limit. And once that limit is hit? Stack overflow.

Tail recursion helps with this. . . if the language supports Tail Optimization (TO). Effectively, TO allows the run-time to swap out the call stack frame with the next frame if it's the last thing to be executed in a function, but not only can tail recursion not solve entire classes of problems but many languages don't support TO anyway. Some languages (like Kotlin and Scala) allow you to flag tail recursive methods with an annotation which causes the compiler to try to change the call to a while loop, but that's a stopgap compared to true TO.

This issue forces us to reconsider when to use recursion. Sets of data need to be sufficiently small, or TO needs to be supported. If you can't promise �one or both of these then you shouldn't be using recursion.

In conclusion

Recursion:

- Can accomplish solving problems by solving the same problem but smaller over and over again

- Is a great tool for solving complex problems with minimal code

- Comes in two flavors, head and tail

- Is frequently dangerous due to stack overflows

Hopefully this has been a useful primer to recursion! There was a lot to go over so feel free to go back as much as you need. Recursion can be tricky to wrap your head around, so just keep at it. You'll notice I specifically did not solve Fibonacci for you, so try using it as an exercise to practice recursion!